Search This Blog

Wednesday, May 2, 2012

Wednesday, April 18, 2012

grant table privileges

DECLARE

BEGIN

FOR x in (SELECT owner, table_name FROM all_tables WHERE OWNER IN('user1'))

LOOP

EXECUTE IMMEDIATE 'GRANT SELECT ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT INSERT ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT UPDATE ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT DELETE ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT ALTER ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT INDEX ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT REFERENCES ON ' || x.owner || '.' || x.table_name || ' TO user2';

END LOOP;

END;

BEGIN

FOR x in (SELECT owner, table_name FROM all_tables WHERE OWNER IN('user1'))

LOOP

EXECUTE IMMEDIATE 'GRANT SELECT ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT INSERT ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT UPDATE ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT DELETE ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT ALTER ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT INDEX ON ' || x.owner || '.' || x.table_name || ' TO user2';

EXECUTE IMMEDIATE 'GRANT REFERENCES ON ' || x.owner || '.' || x.table_name || ' TO user2';

END LOOP;

END;

Tuesday, April 3, 2012

Parameters in impdp and not in expdp

PARTITION_OPTIONS

REMAP_DATAFILE

REMAP_DATAFILE

REMAP_SCHEMA

REMAP_TABLE

REMAP_TABLESPACE

REUSE_DATAFILES

SKIP_UNUSABLE_INDEXES

SQLFILE

STREAMS_CONFIGURATION

TABLE_EXISTS_ACTION

TARGET_EDITION

TRANSFORM

TRANSPORT_DATAFILES

REMAP_DATAFILE

REMAP_DATAFILE

REMAP_SCHEMA

REMAP_TABLE

REMAP_TABLESPACE

REUSE_DATAFILES

SKIP_UNUSABLE_INDEXES

SQLFILE

STREAMS_CONFIGURATION

TABLE_EXISTS_ACTION

TARGET_EDITION

TRANSFORM

TRANSPORT_DATAFILES

Parameters in expdp and not in impdp

COMPRESSION

ENCRYPTION

ENCRYPTION_ALGORITHM

ENCRYPTION_MODE

ESTIMATE_ONLY

FILESIZE

REUSE_DUMPFILES

SAMPLE

ADD_FILE

FILESIZE

ENCRYPTION

ENCRYPTION_ALGORITHM

ENCRYPTION_MODE

ESTIMATE_ONLY

FILESIZE

REUSE_DUMPFILES

SAMPLE

ADD_FILE

FILESIZE

Monday, April 2, 2012

remove old files in a directory

find location -mtime +5 -exec rm {} \;

removes files which are older than 5 days.

removes files which are older than 5 days.

Thursday, March 29, 2012

Create automatic user

CREATE USER &USERNAME IDENTIFIED BY &PASSWORD

DEFAULT TABLESPACE USERS TEMPORARY TABLESPACE TEMP

QUOTA UNLIMITED ON USERS

PROFILE DEFAULT

ACCOUNT UNLOCK;

DEFAULT TABLESPACE USERS TEMPORARY TABLESPACE TEMP

QUOTA UNLIMITED ON USERS

PROFILE DEFAULT

ACCOUNT UNLOCK;

Extract a schema

set pages 500

set lines 200

set long 100000

select dbms_metadata.get_ddl( 'USER', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'SYSTEM_GRANT', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'OBJECT_GRANT', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'ROLE_GRANT', 'AIRTEL' ) from dual;

set lines 200

set long 100000

select dbms_metadata.get_ddl( 'USER', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'SYSTEM_GRANT', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'OBJECT_GRANT', 'AIRTEL' ) from dual

UNION ALL

select dbms_metadata.get_granted_ddl( 'ROLE_GRANT', 'AIRTEL' ) from dual;

Wednesday, March 28, 2012

nslookup

this command is used to get the server name if we know the ip address.

$ nslookup ###.##.##.##

$ nslookup ###.##.##.##

Monday, March 26, 2012

drop a database

This is in 11G

------------------------------

shutdown abort;

startup mount exclusive restrict;

drop database;

exit

------------------------------

shutdown abort;

startup mount exclusive restrict;

drop database;

exit

Wednesday, March 21, 2012

Monday, March 19, 2012

listener file location

By default, the listener file will be located at $ORACLE_HOME/network/admin. If it is not there, then issue the command, lsnrctl status. This will give you the exact location of the listener file.

pfile/spfile contents

Move the existing init file present in $ORACLE_HOME/dbs folder to *.old.

SQL> show parameter spfile;

If it shows the output, then do a "create pfile from spfile" and the pfile will be created from the spfile in the location $ORACLE_HOME/dbs folder. Extract the content of the pfile.

If the output shows pfile, then directly go to $ORACLE_HOME/dbs folder and get the content of the file.

SQL> show parameter spfile;

If it shows the output, then do a "create pfile from spfile" and the pfile will be created from the spfile in the location $ORACLE_HOME/dbs folder. Extract the content of the pfile.

If the output shows pfile, then directly go to $ORACLE_HOME/dbs folder and get the content of the file.

Thursday, March 15, 2012

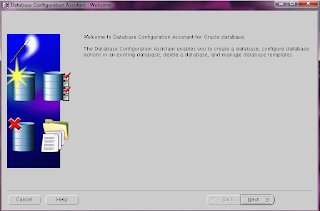

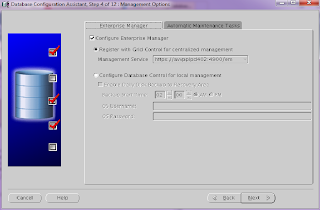

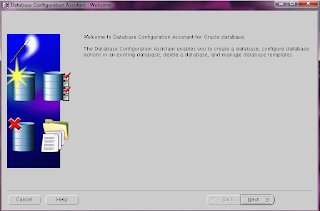

Create a database

Pre-requisite : Make sure Exceed is running in the background.

export ORACLE_SID=

export ORACLE_HOME=

export DISPLAY=ip address(get it from the command prompt using the command ipconfig):0.0

Check whether xterm is working or not..

In AIX, go to location /usr/bin/X11 and type xterm.. you should be able to see a small window pop up

In Linux, /usr/X11R6/bin.

In Sun Solaris, /usr/openwin/bin.

In any location, type dbca

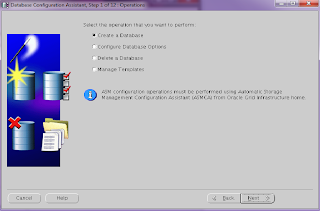

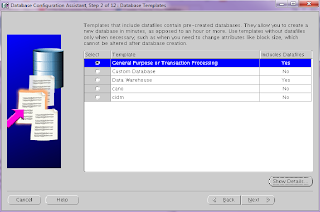

Click "next"

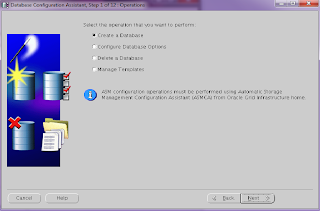

Click "Create a database"

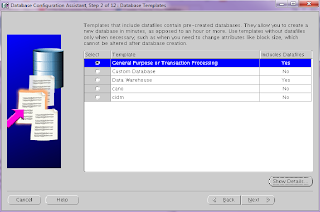

Click "General Purpose or Transaction Processing" and click "next"

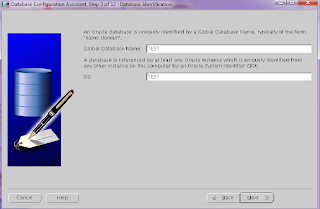

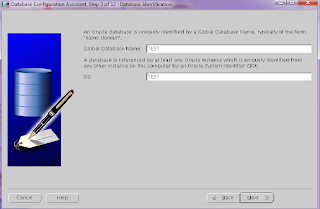

Enter the global database name and SID and click "next"

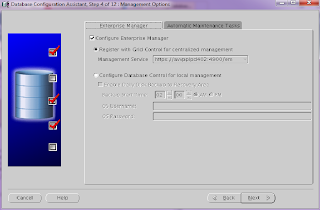

Click "next"

Enter the passwords and click "next"

Select storage type as "ASM" or "Filesystem"

Select "use Oracle Managed Files" and select the available disk group.

click "next"

It will ask for the ASMSNMP password specific to ASM; give the password.

click "ok"

Choose the recovery options for the database: skip and click "next"

click on "sample schemas" and click "next"

Initialization parameters:just click on "typical"

tick "automatic memory management"

click "next"

Database storage: skip and click "next"

Select the database creation options

Select "Create a database"

Click "finish"

It will ask for confirmation. Click "ok"

You can see the progress bar simultaneously while the database is getting created.

You can skip "Password Management" and click "exit"

now you will be able to see the database added. do a ps -ef | grep pmon

I guess by default , the database size will be 2 GB.

If you want to increase the database size, then increase the size of the datafiles or make the datafiles autoextend on.

export ORACLE_SID=

export ORACLE_HOME=

export DISPLAY=ip address(get it from the command prompt using the command ipconfig):0.0

Check whether xterm is working or not..

In AIX, go to location /usr/bin/X11 and type xterm.. you should be able to see a small window pop up

In Linux, /usr/X11R6/bin.

In Sun Solaris, /usr/openwin/bin.

In any location, type dbca

Click "next"

Click "Create a database"

Click "General Purpose or Transaction Processing" and click "next"

Enter the global database name and SID and click "next"

Click "next"

Enter the passwords and click "next"

Select storage type as "ASM" or "Filesystem"

Select "use Oracle Managed Files" and select the available disk group.

click "next"

It will ask for the ASMSNMP password specific to ASM; give the password.

click "ok"

Choose the recovery options for the database: skip and click "next"

click on "sample schemas" and click "next"

Initialization parameters:just click on "typical"

tick "automatic memory management"

click "next"

Database storage: skip and click "next"

Select the database creation options

Select "Create a database"

Click "finish"

It will ask for confirmation. Click "ok"

You can see the progress bar simultaneously while the database is getting created.

You can skip "Password Management" and click "exit"

now you will be able to see the database added. do a ps -ef | grep pmon

I guess by default , the database size will be 2 GB.

If you want to increase the database size, then increase the size of the datafiles or make the datafiles autoextend on.

Tuesday, March 13, 2012

schema export/import using datapump (schema names and tablespace names are same)

check the schema size

check whether you have enough space in the filesystem in which you are going to perform the export

export parameters

------------------------

schemas, directory, logfile, dumpfile

transfer the dump file using scp from source to the destination database

make sure the files have the 700 privilege

drop and create the schema in the destination database

make sure whether you have sufficient space in the database and in the filesystem

import parameters

-------------------------

schemas, directory, logfile, dumpfile

check whether you have enough space in the filesystem in which you are going to perform the export

export parameters

------------------------

schemas, directory, logfile, dumpfile

transfer the dump file using scp from source to the destination database

make sure the files have the 700 privilege

drop and create the schema in the destination database

make sure whether you have sufficient space in the database and in the filesystem

import parameters

-------------------------

schemas, directory, logfile, dumpfile

Monday, March 12, 2012

Disk I/O

In AWR, check for the columns Tablespace I/O stats and File I/O stats.

In Tablespace I/O, see the avg(ms). If it is > 20, we may have to take some initiative.

In File I/O stats, if the Reads column shows more value, then we can try to change the mount point.

In Tablespace I/O, see the avg(ms). If it is > 20, we may have to take some initiative.

In File I/O stats, if the Reads column shows more value, then we can try to change the mount point.

Friday, March 9, 2012

create a new service

exec dbms_service.CREATE_SERVICE(SERVICE_NAME=>'*******_APP', NETWORK_NAME=>'********_APP')

exec dbms_service.CREATE_SERVICE(SERVICE_NAME=>'*********_DBA', NETWORK_NAME=>'********_DBA')

exec dbms_service.START_SERVICE('**********_APP')

exec dbms_service.START_SERVICE('**********_DBA')

exec dbms_service.CREATE_SERVICE(SERVICE_NAME=>'*********_DBA', NETWORK_NAME=>'********_DBA')

exec dbms_service.START_SERVICE('**********_APP')

exec dbms_service.START_SERVICE('**********_DBA')

Friday, February 24, 2012

kill session in RAC

Login to all the nodes/machines and try to query :

select sid, serial# from v$session where sid='@@@';

kill the session :

alter system kill session 'sid,serial#' immediate;

select status from v$session where sid='@@@';

If the status says INVALID then ignore, else

while killing the session, if the status says "ORA-00031: session marked for kill", the status will show "killed". In this case, try to kill the session in the OS.

Run the below query to get the spid along with other useful details.

SET LINESIZE 100

COLUMN spid FORMAT A10

COLUMN username FORMAT A10

COLUMN program FORMAT A45

SELECT s.inst_id,

s.sid,

s.serial#,

p.spid,

s.username,

s.program

FROM gv$session s

JOIN gv$process p ON p.addr = s.paddr AND p.inst_id = s.inst_id

WHERE s.type != 'BACKGROUND';

Get the spid for the appropriate sid and in the OS level, issue the following command.

kill -9 spid

Sometimes, you would get the below error :

ORA-00027 cannot kill current session

In this case, make sure you and the users have logged out of the session.

select sid, serial# from v$session where sid='@@@';

kill the session :

alter system kill session 'sid,serial#' immediate;

select status from v$session where sid='@@@';

If the status says INVALID then ignore, else

while killing the session, if the status says "ORA-00031: session marked for kill", the status will show "killed". In this case, try to kill the session in the OS.

Run the below query to get the spid along with other useful details.

SET LINESIZE 100

COLUMN spid FORMAT A10

COLUMN username FORMAT A10

COLUMN program FORMAT A45

SELECT s.inst_id,

s.sid,

s.serial#,

p.spid,

s.username,

s.program

FROM gv$session s

JOIN gv$process p ON p.addr = s.paddr AND p.inst_id = s.inst_id

WHERE s.type != 'BACKGROUND';

Get the spid for the appropriate sid and in the OS level, issue the following command.

kill -9 spid

Sometimes, you would get the below error :

ORA-00027 cannot kill current session

In this case, make sure you and the users have logged out of the session.

Tuesday, February 21, 2012

alert log file

SQL>show parameter background dump dest;

In OS prompt, go to the location, you can find the alert log file named something similar to the one below :

alert_instancename.log

Open it with either more or tail command.

In OS prompt, go to the location, you can find the alert log file named something similar to the one below :

alert_instancename.log

Open it with either more or tail command.

read trace file

SQL>show parameter background dump dest;

In OS prompt, go to the location.

you will find .trc and .trm files which are not in human readable format. To read the trace file, do the following.

$tkprof .trc filename

The output file will be created with .prf

open the .prf file using more or tail command.

In OS prompt, go to the location.

you will find .trc and .trm files which are not in human readable format. To read the trace file, do the following.

$tkprof .trc filename

The output file will be created with .prf

open the .prf file using more or tail command.

Sunday, February 19, 2012

check whether a database is primary or standby

SQL> select DATABASE_ROLE from v$database;

DATABASE_ROLE

-------------------------------

PRIMARY

SQL> select DATABASE_ROLE from v$database;

DATABASE_ROLE

-------------------------------

PHYSICAL STANDBY

DATABASE_ROLE

-------------------------------

PRIMARY

SQL> select DATABASE_ROLE from v$database;

DATABASE_ROLE

-------------------------------

PHYSICAL STANDBY

Saturday, February 18, 2012

uptime command

uptime

The uptime command prints the current time, the length of time the system has been up, the number of users online, and the load average. The load average is the number of runnable processes over the preceding 1-, 5-, 15-minute intervals. The output of the uptime command is, essentially, the heading line provided by the w command.

Example

8:11pm up 6 day(s), 16:09, 1 user, load average: 0.23, 0.25, 0.24

The uptime command prints the current time, the length of time the system has been up, the number of users online, and the load average. The load average is the number of runnable processes over the preceding 1-, 5-, 15-minute intervals. The output of the uptime command is, essentially, the heading line provided by the w command.

Example

8:11pm up 6 day(s), 16:09, 1 user, load average: 0.23, 0.25, 0.24

Friday, February 17, 2012

free space and used space in a database

free space

----------

SQL> SELECT sum(bytes)/1024/1024/1024 "free space in GB"

2 FROM dba_free_space;

used space

----------

SQL> SELECT sum(bytes)/1024/1024/1024 "used space in GB"

2 FROM dba_segments

3 /

----------

SQL> SELECT sum(bytes)/1024/1024/1024 "free space in GB"

2 FROM dba_free_space;

used space

----------

SQL> SELECT sum(bytes)/1024/1024/1024 "used space in GB"

2 FROM dba_segments

3 /

flush shared pool

SELECT PROPNAME, PROPVALUE FROM SERVER_CONFIG_PROPERTIES;

Normally this query returns several rows of data for a user. However, when the bug is in affect it generates this error: “ORA-00942: table or view does not exist”

To fix this issue please login to the database as the sys user and flush the shared pool using this command:

alter system flush shared_pool;

Run the above command once or twice as per the user's requirement.

Normally this query returns several rows of data for a user. However, when the bug is in affect it generates this error: “ORA-00942: table or view does not exist”

To fix this issue please login to the database as the sys user and flush the shared pool using this command:

alter system flush shared_pool;

Run the above command once or twice as per the user's requirement.

Tuesday, February 14, 2012

spikes

This image looks as if there is a spike but actually the database is looking good.

This image is the 7 day view which clearly shows that there is no spike. This image and the one before this belong to the same database during same period. This looks no different than any other day.

These are the spikes which are worrisome.

This image is the 7 day view which clearly shows that there is no spike. This image and the one before this belong to the same database during same period. This looks no different than any other day.

These are the spikes which are worrisome.

Monday, February 13, 2012

row level contention or lock

SELECT sid, sql_text

FROM v$session s

LEFT JOIN v$sql q ON q.sql_id=s.sql_id

WHERE state = 'WAITING' AND wait_class != 'Idle'

AND event = 'enq: TX - row lock contention';

FROM v$session s

LEFT JOIN v$sql q ON q.sql_id=s.sql_id

WHERE state = 'WAITING' AND wait_class != 'Idle'

AND event = 'enq: TX - row lock contention';

Saturday, February 4, 2012

Wednesday, January 25, 2012

memory

In the unix command prompt,

>svmon -O summary=basic,unit=auto

available column shows the memory available

we can kill the session in order to release some memory

How to get the username who is consuming more memory

------------------------------------------------------------------------------------

run the below query:

SELECT spid, program,

pga_max_mem max,

pga_alloc_mem alloc,

pga_used_mem used,

pga_freeable_mem free

FROM V$PROCESS

order by 3;

SQL> select spid,addr from v$process where spid='xxxxxxxx';

SQL> select sid,serial#,username,status,sql_id,machine from v$session

where paddr='xxxxxxxxxxx';

>svmon -O summary=basic,unit=auto

available column shows the memory available

we can kill the session in order to release some memory

How to get the username who is consuming more memory

------------------------------------------------------------------------------------

run the below query:

SELECT spid, program,

pga_max_mem max,

pga_alloc_mem alloc,

pga_used_mem used,

pga_freeable_mem free

FROM V$PROCESS

order by 3;

SQL> select spid,addr from v$process where spid='xxxxxxxx';

SQL> select sid,serial#,username,status,sql_id,machine from v$session

where paddr='xxxxxxxxxxx';

Monday, January 23, 2012

history of sql commands

To track the sql commands, we can either check in OEM grid control or AWR report.

blocking session

select sid, username, serial#, process, nvl(sql_id, 0), sql_address, blocking_session, wait_class, event, p1, p2, p3, seconds_in_wait from v$session where blocking_session_status = 'VALID' OR sid IN (select blocking_session from v$session where blocking_session_status = 'VALID');

another query

--------------------------

select l1.sid, ' IS BLOCKING ', l2.sid

from v$lock l1, v$lock l2

where l1.block =1 and l2.request > 0

and l1.id1=l2.id1

and l1.id2=l2.id2

/

The output will be something like the below

SID 'ISBLOCKING' SID

--- ------------- ------

422 IS BLOCKING 479

Another query

------------------------

select s1.username || '@' || s1.machine

|| ' ( SID=' || s1.sid || ' ) is blocking '

|| s2.username || '@' || s2.machine || ' ( SID=' || s2.sid || ' ) ' AS blocking_status

from v$lock l1, v$session s1, v$lock l2, v$session s2

where s1.sid=l1.sid and s2.sid=l2.sid

and l1.BLOCK=1 and l2.request > 0

and l1.id1 = l2.id1

and l2.id2 = l2.id2 ;

The output will be like this :

BLOCKING_STATUS

-----------------------------------------------------------------------

BULKLOAD@yttrium ( SID=422 ) is blocking BULKLOAD@yttrium ( SID=479 )

another query

--------------------------

select l1.sid, ' IS BLOCKING ', l2.sid

from v$lock l1, v$lock l2

where l1.block =1 and l2.request > 0

and l1.id1=l2.id1

and l1.id2=l2.id2

/

The output will be something like the below

SID 'ISBLOCKING' SID

--- ------------- ------

422 IS BLOCKING 479

Another query

------------------------

select s1.username || '@' || s1.machine

|| ' ( SID=' || s1.sid || ' ) is blocking '

|| s2.username || '@' || s2.machine || ' ( SID=' || s2.sid || ' ) ' AS blocking_status

from v$lock l1, v$session s1, v$lock l2, v$session s2

where s1.sid=l1.sid and s2.sid=l2.sid

and l1.BLOCK=1 and l2.request > 0

and l1.id1 = l2.id1

and l2.id2 = l2.id2 ;

The output will be like this :

BLOCKING_STATUS

-----------------------------------------------------------------------

BULKLOAD@yttrium ( SID=422 ) is blocking BULKLOAD@yttrium ( SID=479 )

compress all the archive logs at one time

go to the location where archive logs are present

compress *.arc

compress *.arc

Tuesday, January 17, 2012

gather stats on index

SQL> Execute DBMS_STATS.GATHER_INDEX_STATS ('schemaname','indexname');

PL/SQL procedure successfully completed.

PL/SQL procedure successfully completed.

Monday, January 16, 2012

schema refresh using conventional export/import (schemas and tablespaces are different)

In source database

exp system/password owner=ownername file=dumpfilename.dmp compress=n statistics=none consistent=y buffer=1024000 log=logfilename

transfer the dump file from source to destination

In the destination database,

create the structure of tablespace and schema

imp system/password fromuser= touser= file=dumpfile statistics=none buffer=1024000

log=logfilename ignore=y

exp system/password owner=ownername file=dumpfilename.dmp compress=n statistics=none consistent=y buffer=1024000 log=logfilename

transfer the dump file from source to destination

In the destination database,

create the structure of tablespace and schema

imp system/password fromuser= touser= file=dumpfile statistics=none buffer=1024000

log=logfilename ignore=y

schema refresh using datapump (schema names and tablespace names are different)

do the export in source db

export ORACLE_SID=

export ORACLE_HOME=

export PATH=$ORACLE_HOME/bin

expdp system/password schemas=schemaname directory=directoryname parallel=2 dumpfile=dumpfilename_%U.dmp log=logfilename

transfer the dump file from source to destination database

In the destination database,

create the tablespace and the schema structure

export ORACLE_SID=

export ORACLE_HOME=

export PATH=$ORACLE_HOME/bin

impdp system/password remap_tablespace=sourcetablespacename:destinationtablespacename remap_schema=sourceschemaname:destinationschemaname table_exists_action=truncate directory=directoryname parallel=2 dumpfile=dumpfilenames log=logfilename

~

export ORACLE_SID=

export ORACLE_HOME=

export PATH=$ORACLE_HOME/bin

expdp system/password schemas=schemaname directory=directoryname parallel=2 dumpfile=dumpfilename_%U.dmp log=logfilename

transfer the dump file from source to destination database

In the destination database,

create the tablespace and the schema structure

export ORACLE_SID=

export ORACLE_HOME=

export PATH=$ORACLE_HOME/bin

impdp system/password remap_tablespace=sourcetablespacename:destinationtablespacename remap_schema=sourceschemaname:destinationschemaname table_exists_action=truncate directory=directoryname parallel=2 dumpfile=dumpfilenames log=logfilename

~

Sunday, January 15, 2012

add database to OEM Grid control

all targets

add database instance

It will ask for the host name, provide it

continue

automatically pulls up the databases present in the host

manually add

add database instance

It will ask for the host name, provide it

continue

automatically pulls up the databases present in the host

manually add

Thursday, January 12, 2012

reclaim the space in the database temporarily

remove the temp and undo tablespaces and create new ones and point the database to the new tablespaces so that we can reclaim some space in the database until temp and undo get fill up.

reclaim from undo tbs

-----------------------------

CREATE UNDO TABLESPACE undotbs2 DATAFILE '/u01/app/oracle/oradata/DB11G/undotbs02.dbf' SIZE 2G AUTOEXTEND ON NEXT 1M;

ALTER SYSTEM SET UNDO_TABLESPACE=undotbs2;

DROP TABLESPACE undotbs1 INCLUDING CONTENTS AND DATAFILES;

reclaim from temp tbs

-----------------------------

CREATE TEMPORARY TABLESPACE temp2 TEMPFILE '/u01/app/oracle/oradata/DB11G/temp02.dbf' SIZE 2G AUTOEXTEND ON NEXT 1M;

ALTER DATABASE DEFAULT TEMPORARY TABLESPACE temp2;

-- Switch all existing users to new temp tablespace.

BEGIN

FOR cur_user IN (SELECT username FROM dba_users WHERE temporary_tablespace = 'TEMP') LOOP

EXECUTE IMMEDIATE 'ALTER USER ' || cur_user.username || ' TEMPORARY TABLESPACE temp2';

END LOOP;

END;

/

DROP TABLESPACE temp INCLUDING CONTENTS AND DATAFILES; --> This command may take a long time. Follow this :

SELECT b.tablespace,b.segfile#,b.segblk#,b.blocks,a.sid,a.serial#,

a.username,a.osuser, a.status

FROM v$session a,v$sort_usage b

WHERE a.saddr = b.session_addr;

alter system kill session 'SID_NUMBER, SERIAL#NUMBER';

reclaim from undo tbs

-----------------------------

CREATE UNDO TABLESPACE undotbs2 DATAFILE '/u01/app/oracle/oradata/DB11G/undotbs02.dbf' SIZE 2G AUTOEXTEND ON NEXT 1M;

ALTER SYSTEM SET UNDO_TABLESPACE=undotbs2;

DROP TABLESPACE undotbs1 INCLUDING CONTENTS AND DATAFILES;

reclaim from temp tbs

-----------------------------

CREATE TEMPORARY TABLESPACE temp2 TEMPFILE '/u01/app/oracle/oradata/DB11G/temp02.dbf' SIZE 2G AUTOEXTEND ON NEXT 1M;

ALTER DATABASE DEFAULT TEMPORARY TABLESPACE temp2;

-- Switch all existing users to new temp tablespace.

BEGIN

FOR cur_user IN (SELECT username FROM dba_users WHERE temporary_tablespace = 'TEMP') LOOP

EXECUTE IMMEDIATE 'ALTER USER ' || cur_user.username || ' TEMPORARY TABLESPACE temp2';

END LOOP;

END;

/

DROP TABLESPACE temp INCLUDING CONTENTS AND DATAFILES; --> This command may take a long time. Follow this :

SELECT b.tablespace,b.segfile#,b.segblk#,b.blocks,a.sid,a.serial#,

a.username,a.osuser, a.status

FROM v$session a,v$sort_usage b

WHERE a.saddr = b.session_addr;

alter system kill session 'SID_NUMBER, SERIAL#NUMBER';

Wednesday, January 11, 2012

filesystem fills up

1) Unwanted files can be removed (old files)

2) Shrink the datafiles

3) turn all the datafiles in to autoextend off and create a datafile for each tablespace with autoextend on to another filesystem which has more space

4) move the dump (dmp) files from the filled up filesystem to another filesystem which has some space.

2) Shrink the datafiles

3) turn all the datafiles in to autoextend off and create a datafile for each tablespace with autoextend on to another filesystem which has more space

4) move the dump (dmp) files from the filled up filesystem to another filesystem which has some space.

EXP-00105: parameter CONSISTENT is not supported for this user

we cannot do export with sys user if we need to use the consistent=y parameter.

we get this error.

EXP-00105: parameter CONSISTENT is not supported for this user

use another user to export.

we get this error.

EXP-00105: parameter CONSISTENT is not supported for this user

use another user to export.

table refresh using conventional export/import

Check for the space in the filesystem.

Take backup of the table(s) in the source database.

vi exp.par

--------------

tables=tablename

file=dumpfilename

log=logfilename

compress=n

statistics=none

consistent=y

buffer=1024000

transfer the dumpfile from source to destination database

In the destination database,

drop the table in the destination database

check for the space in the filesystem and the destination database (tablespace).

import the table to the destination database

vi imp.par

---------------

file=dumpfile

log=logfile

fromuser=user of source database

tables=(tab1, tab2, etc.,)

rows=y

grants=y

buffer=1024000

statistics=none

Take backup of the table(s) in the source database.

vi exp.par

--------------

tables=tablename

file=dumpfilename

log=logfilename

compress=n

statistics=none

consistent=y

buffer=1024000

transfer the dumpfile from source to destination database

In the destination database,

drop the table in the destination database

check for the space in the filesystem and the destination database (tablespace).

import the table to the destination database

vi imp.par

---------------

file=dumpfile

log=logfile

fromuser=user of source database

tables=(tab1, tab2, etc.,)

rows=y

grants=y

buffer=1024000

statistics=none

schema refresh using conventional export/import

Check for the space in both the filesystem as well as in the database.

Take backup of the schema in the source database.

vi exp.par

--------------

owner=schemaname

file=dumpfilename

log=logfilename

compress=n

statistics=none

consistent=y

buffer=1024000

transfer the dumpfile from source to destination database

In the destination database,

drop the schema

create the schema from the source database

coalesce the tablespace

import the schema to the destination database

vi imp.par

---------------

file=dumpfile

log=logfile

fromuser=schema in the source database

touser=schema in the destination database

rows=y

indexes=y

grants=y

buffer=1024000

statistics=none

ignore=y

Take backup of the schema in the source database.

vi exp.par

--------------

owner=schemaname

file=dumpfilename

log=logfilename

compress=n

statistics=none

consistent=y

buffer=1024000

transfer the dumpfile from source to destination database

In the destination database,

drop the schema

create the schema from the source database

coalesce the tablespace

import the schema to the destination database

vi imp.par

---------------

file=dumpfile

log=logfile

fromuser=schema in the source database

touser=schema in the destination database

rows=y

indexes=y

grants=y

buffer=1024000

statistics=none

ignore=y

Saturday, January 7, 2012

full database refresh using conventional export/import

backup the source database and restore it in the destination database

take backup of source database

use below parameters

full=y

file=dumpfilename

log=logfilename

consistent=y

buffer=1024000

statistics=none

compress=n

scp the dump file from source to destination server

In the destination database, you need to have space in both the filesystem and the database. In the filesystem, it is enough if you have 1/3 rd or may be 1/4 th of the total source database size as it is only the raw data that resides in the filesystem.

Note down the datafile locations for the tablespaces

remove all the non default users, roles, profiles and the non-default tablespaces including the contents and datafiles.

remove the datafiles physically.

create the tablespaces from the source database

create roles and profiles

create users

vi imp.par

-----------

file=dumpfile

full=y

buffer=1024000

log=logfile

statistics=none

ignore=y

commit=y

take backup of source database

use below parameters

full=y

file=dumpfilename

log=logfilename

consistent=y

buffer=1024000

statistics=none

compress=n

scp the dump file from source to destination server

In the destination database, you need to have space in both the filesystem and the database. In the filesystem, it is enough if you have 1/3 rd or may be 1/4 th of the total source database size as it is only the raw data that resides in the filesystem.

Note down the datafile locations for the tablespaces

remove all the non default users, roles, profiles and the non-default tablespaces including the contents and datafiles.

remove the datafiles physically.

create the tablespaces from the source database

create roles and profiles

create users

vi imp.par

-----------

file=dumpfile

full=y

buffer=1024000

log=logfile

statistics=none

ignore=y

commit=y

export/import using sys user

exp \'/ as sysdba\' parfile=exp.par

as sys user will have all the necessary privileges.

as sys user will have all the necessary privileges.

contents of disk groups in asm

ls

This will list the databases which reside in that particular disk group

you can do cd to that disk group and say ls again. It will show the databases.

If you further do cd to the database, it will show the database files like control file, logfile, datafile, tempfile, parameter file, etc.

If you want to remove a file, you can say rm filename.

This will list the databases which reside in that particular disk group

you can do cd to that disk group and say ls again. It will show the databases.

If you further do cd to the database, it will show the database files like control file, logfile, datafile, tempfile, parameter file, etc.

If you want to remove a file, you can say rm filename.

Wednesday, January 4, 2012

Clean up old files(before 5 days)

find location of archive log files -name "*.ARC" -mtime +5 -exec rm {} \;

(or)

usr/bin/find location of archive log files -name "*.ARC" -mtime +5 -exec rm {} \;

same for trace files(.trc & .trm), .aud files

(or)

usr/bin/find location of archive log files -name "*.ARC" -mtime +5 -exec rm {} \;

same for trace files(.trc & .trm), .aud files

check whether the agent is running or not

ps -ef grep emagent

it will tell the location where the agent is running.

go to that location and run this command,

./emctl status agent

if it says "Agent is Running and Ready"

it means the agent is up and running.

If it is not running just give this command,

./emctl start agent

To stop the agent running, give this command,

./emctl stop agent

it will tell the location where the agent is running.

go to that location and run this command,

./emctl status agent

if it says "Agent is Running and Ready"

it means the agent is up and running.

If it is not running just give this command,

./emctl start agent

To stop the agent running, give this command,

./emctl stop agent

Tuesday, January 3, 2012

exp and expdp mappings

first is exp

second is expdp

BUFFER-NOT NEEDED

COMPRESS-NOT NEEDED

CONSISTENT-NOT NEEDED

CONSTRAINTS-EXCLUDE=CONSTRAINT

DIRECT-NOT NEEDED

FEEDBACK-STATUS

FILE-DUMPFILE

GRANTS-EXCLUDE=GRANT

INDEXES-EXCLUDE=INDEX

LOG-LOGFILE

OBJECT_CONSISTENT-NOT NEEDED

OWNER-SCHEMAS

RECORDLENGTH-NOT NEEDED

RESUMABLE-NOT NEEDED

RESUMABLE_NAME-NOT NEEDED

RESUMABLE_TIMEOUT-NOT NEEDED

ROWS=N-CONTENT=METADATA_ONLY

ROWS=Y-CONTENT=ALL

STATISTICS-NOT NEEDED

TRIGGERS-EXCLUDE=TRIGGER

TTS_FULL_CHECK-TRANSPORT_FULL_CHECK

IGNORE=Y-TABLE_EXISTS_ACTION=APPEND

second is expdp

BUFFER-NOT NEEDED

COMPRESS-NOT NEEDED

CONSISTENT-NOT NEEDED

CONSTRAINTS-EXCLUDE=CONSTRAINT

DIRECT-NOT NEEDED

FEEDBACK-STATUS

FILE-DUMPFILE

GRANTS-EXCLUDE=GRANT

INDEXES-EXCLUDE=INDEX

LOG-LOGFILE

OBJECT_CONSISTENT-NOT NEEDED

OWNER-SCHEMAS

RECORDLENGTH-NOT NEEDED

RESUMABLE-NOT NEEDED

RESUMABLE_NAME-NOT NEEDED

RESUMABLE_TIMEOUT-NOT NEEDED

ROWS=N-CONTENT=METADATA_ONLY

ROWS=Y-CONTENT=ALL

STATISTICS-NOT NEEDED

TRIGGERS-EXCLUDE=TRIGGER

TTS_FULL_CHECK-TRANSPORT_FULL_CHECK

IGNORE=Y-TABLE_EXISTS_ACTION=APPEND

dbms_scheduler

create and enable the job

------------------------------------------------

SQL> execute sys.dbms_scheduler.create_job(job_name=>'"user"."Jobname"', job_type=>'EXECUTABLE', job_action=>'complete name and location of the script file', repeat_interval=>'FREQ=WEEKLY;BYDAY=MON,TUE,WED,THU,FRI;BYHOUR=07;BYMINUTE=01;BYSECOND=1', job_class=>'"jobgroupname"', number_of_arguments=>'1', auto_drop=>FALSE, enabled=>FALSE);

PL/SQL procedure successfully completed.

SQL> execute sys.dbms_scheduler.set_job_argument_value( job_name => '"user"."jobname"', argument_position => 1, argument_value => 'value');

PL/SQL procedure successfully completed.

SQL> execute sys.dbms_scheduler.enable( '"user". "jobname"' );

PL/SQL procedure successfully completed.

drop the job

--------------------------

SQL> EXEC DBMS_SCHEDULER.DROP_JOB('user.jobname');

PL/SQL procedure successfully completed.

------------------------------------------------

SQL> execute sys.dbms_scheduler.create_job(job_name=>'"user"."Jobname"', job_type=>'EXECUTABLE', job_action=>'complete name and location of the script file', repeat_interval=>'FREQ=WEEKLY;BYDAY=MON,TUE,WED,THU,FRI;BYHOUR=07;BYMINUTE=01;BYSECOND=1', job_class=>'"jobgroupname"', number_of_arguments=>'1', auto_drop=>FALSE, enabled=>FALSE);

PL/SQL procedure successfully completed.

SQL> execute sys.dbms_scheduler.set_job_argument_value( job_name => '"user"."jobname"', argument_position => 1, argument_value => 'value');

PL/SQL procedure successfully completed.

SQL> execute sys.dbms_scheduler.enable( '"user". "jobname"' );

PL/SQL procedure successfully completed.

drop the job

--------------------------

SQL> EXEC DBMS_SCHEDULER.DROP_JOB('user.jobname');

PL/SQL procedure successfully completed.

sql consuming high cpu

go to OEM grid

target

database

performance tab

top activity

Keep the cursor on the spike (there should be a major difference in the graph)

click on the SQL ID

get the SID.

use this command to get the serial# and the user

SQL> SELECT SID, SERIAL#, USERNAME FROM V$SESSION where SID='797';

kill the session (if the user requests)

If you want to go back far, you can click on top activity & choose historical and choose the date and time

target

database

performance tab

top activity

Keep the cursor on the spike (there should be a major difference in the graph)

click on the SQL ID

get the SID.

use this command to get the serial# and the user

SQL> SELECT SID, SERIAL#, USERNAME FROM V$SESSION where SID='797';

kill the session (if the user requests)

If you want to go back far, you can click on top activity & choose historical and choose the date and time

Monday, January 2, 2012

enable/disable archive log in oracle 10g/11g

Enable

----------

SQL> archive log list

SQL> shutdown immediate

SQL> startup mount

SQL> alter database archivelog;

SQL> alter database open;

SQL> archive log list

Disable

----------

SQL> archive log list;

SQL> shutdown immediate

SQL> startup mount

SQL> alter database noarchivelog;

SQL> alter database open;

SQL> archive log list;

----------

SQL> archive log list

SQL> shutdown immediate

SQL> startup mount

SQL> alter database archivelog;

SQL> alter database open;

SQL> archive log list

Disable

----------

SQL> archive log list;

SQL> shutdown immediate

SQL> startup mount

SQL> alter database noarchivelog;

SQL> alter database open;

SQL> archive log list;

Sunday, January 1, 2012

kill datapump job

If you use ctrl+c, the job won't get killed. It will continue to run in the background.

SQL> select job_name from dba_datapump_jobs;

result

$ expdp USERNAME/PASSWORD attach=result

EXPORT>KILL_JOB

Are you sure you want to kill the job(yes or no)? Yes

If the status of the job is in 'not running', you will not be able to kill the job by the above said method.

drop table job_name;-> will drop the job successfully.

SQL> select job_name from dba_datapump_jobs;

result

$ expdp USERNAME/PASSWORD attach=result

EXPORT>KILL_JOB

Are you sure you want to kill the job(yes or no)? Yes

If the status of the job is in 'not running', you will not be able to kill the job by the above said method.

drop table job_name;-> will drop the job successfully.

table export/import using datapump

export

--------

#!/bin/ksh

TNS_ADMIN=fill it

export TNS_ADMIN

ORACLE_HOME=fill it

export ORACLE_HOME

PATH=$ORACLE_HOME/bin:$PATH:.

export PATH

export DATE=`date +"%m-%d-%Y"`

ORACLE_SID=dbname

export ORACLE_SID

cd location

expdp user/password directory=directoryname parallel=decide as per cpu dumpfile=table_export_dbname_$date_%U.dmp logfile=table_export_dbname_$date.log

tables=all the table names along with schema names

gzip location/*.dmp

exit 0

Import

---------

#!/bin/ksh

TNS_ADMIN=fill it

export TNS_ADMIN

ORACLE_HOME=fill it

export ORACLE_HOME

PATH=$ORACLE_HOME/bin:$PATH:.

export PATH

export DATE=`date +"%m-%d-%Y"`

ORACLE_SID=dbname

export ORACLE_SID

cd location

gunzip location/*.gz

expdp user/password directory=directoryname parallel=decide as per cpu dumpfile=table_export_dbname_$date_%U.dmp logfile=table_import_dbname_$date.log tables=all the table names along with schema names

exit 0

--------

#!/bin/ksh

TNS_ADMIN=fill it

export TNS_ADMIN

ORACLE_HOME=fill it

export ORACLE_HOME

PATH=$ORACLE_HOME/bin:$PATH:.

export PATH

export DATE=`date +"%m-%d-%Y"`

ORACLE_SID=dbname

export ORACLE_SID

cd location

expdp user/password directory=directoryname parallel=decide as per cpu dumpfile=table_export_dbname_$date_%U.dmp logfile=table_export_dbname_$date.log

tables=all the table names along with schema names

gzip location/*.dmp

exit 0

Import

---------

#!/bin/ksh

TNS_ADMIN=fill it

export TNS_ADMIN

ORACLE_HOME=fill it

export ORACLE_HOME

PATH=$ORACLE_HOME/bin:$PATH:.

export PATH

export DATE=`date +"%m-%d-%Y"`

ORACLE_SID=dbname

export ORACLE_SID

cd location

gunzip location/*.gz

expdp user/password directory=directoryname parallel=decide as per cpu dumpfile=table_export_dbname_$date_%U.dmp logfile=table_import_dbname_$date.log tables=all the table names along with schema names

exit 0

Subscribe to:

Comments (Atom)